The Human Core of Mental Health Care

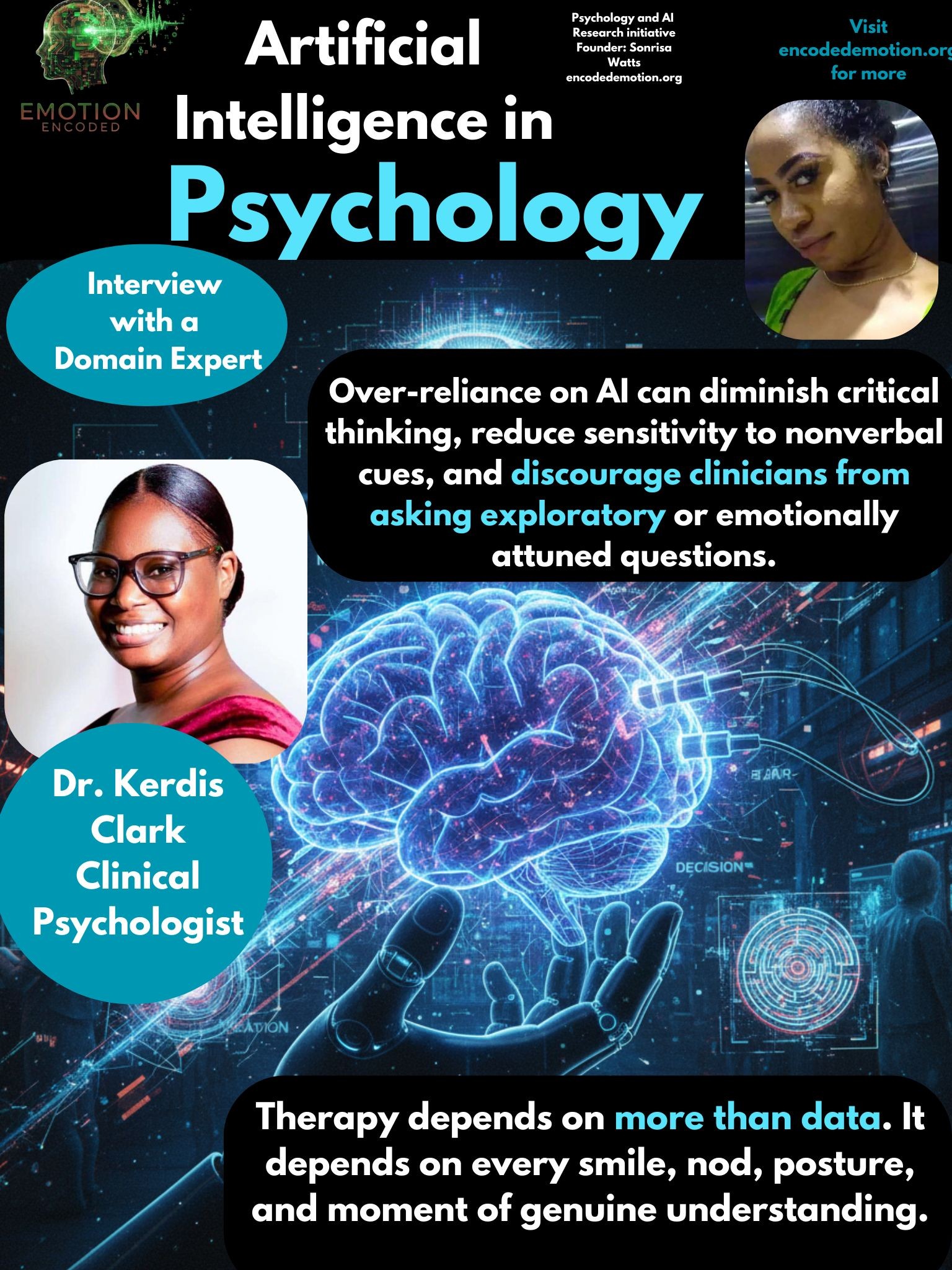

Dr. Clarke on AI, Empathy, and Clinical Judgment

A Conversation with a Nevisian Clinical Psychologist and Founder of **Respite**.

Dr. Clarke, a clinical psychologist from Nevis and the founder of Respite, offers a grounded and deeply human perspective on the evolving role of artificial intelligence in mental health care. Her views balance openness to innovation with an unwavering commitment to ethical responsibility and human connection.

AI as an Adjunct, Not a Replacement

From the beginning, Dr. Clarke makes her stance clear. She states, "I am not entirely opposed to the integration of technology into mental health care and AI-based tools are no exception." She believes AI can have meaningful value when used with the right intentions and clear boundaries.

She emphasises that these tools should not replace human clinicians. "If one understands that these tools are not intended to replace specialised clinical support, their use can be appropriately and cautiously considered." In her view, AI can guide, support, and complement clinical work, especially in areas that often consume a clinician’s time.

Dr. Clarke notes, "A significant part of mental health care involves administrative responsibilities such as documentation and reporting." For these tasks, she sees AI as an asset. It can generate summaries, draft templates from therapy notes, and produce psychoeducational materials that enhance patient understanding.

Why Clinicians Hesitate to Trust AI

Dr. Clarke acknowledges that many clinicians remain uneasy about relying on AI systems, even when the data appears accurate. She explains, "This hesitation stems from the ethical and professional responsibility to exercise sound clinical judgment and safeguard patient welfare."

For those trained in more traditional eras of clinical work, AI can feel alien to the emotional core of psychology. "Reliance on AI may feel impersonal or even dehumanising," she says, describing how some clinicians fear the potential harm that could result from overreliance on non-human recommendations.

This concern, she explains, reflects a deeper emotional instinct. "This emotional and ethical tension reinforces the instinct to maintain professional autonomy and human accountability in clinical practice."

The Real Risk of Overreliance

While AI can be helpful, Dr. Clarke believes there is a genuine danger if clinicians become too dependent on its recommendations. She explains, "The extent of this risk depends largely on the individual clinician’s personal ethics, integrity, and professional discipline."

She compares blind reliance on AI to rigidly following diagnostic manuals without critical thought. "It can diminish critical thinking, reduce sensitivity to nonverbal cues, and discourage clinicians from asking exploratory or emotionally attuned questions."

Most importantly, AI still lacks cultural and emotional depth. "AI systems may not yet fully account for cultural context or subtle interpersonal dynamics." While AI can analyse surface-level data, she reminds us that it "cannot capture or interpret the raw emotional experience of a patient in the same way a human clinician can."

Empathy Cannot Be Automated

Dr. Clarke’s most powerful insights centre on empathy and the therapeutic relationship. She describes human connection as the heart of effective mental health care: "Human connection is the essence of psychology and the foundation of healing relationships."

She explains that empathy is not optional. "Empathy, warmth, and presence are vital to fostering trust and psychological safety."

While AI can help with reminders, progress tracking, and educational content, she is firm that it will never replicate true therapeutic presence. "Every smile, nod, posture, and moment of shared understanding contributes to the therapeutic experience in ways that algorithms simply cannot emulate."

A Careful but Open Future

Dr. Clarke’s reflections show that the future of mental health care is not about choosing between humans and technology. It is about combining the strengths of both while protecting the qualities that make therapy human.

Her message is clear and steady.

- AI can support.
- AI can summarise.
- AI can educate.

But healing requires a human being.