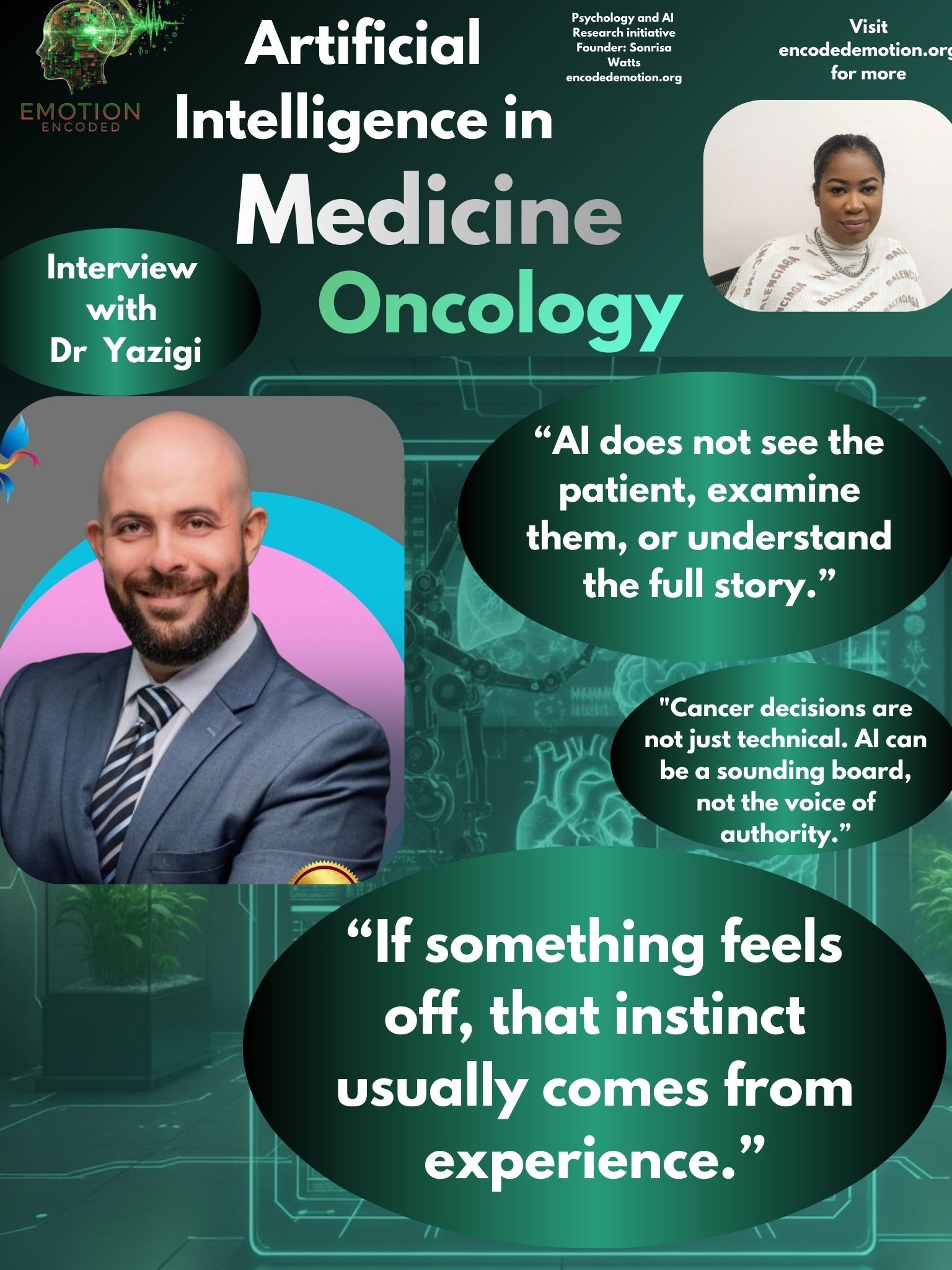

When AI Meets Oncology:

Interview with Antiguan Oncologist Dr. Hanybal Yazigi

Artificial intelligence is becoming more common in cancer care. It is used for research, predictions, workflow support, and clinical suggestions. But as these systems grow more advanced, one question remains: What role should AI play in decisions that affect real people facing life-changing diagnoses?

In this interview, oncologist Dr. Hanybal Yazigi shares a grounded perspective. While he sees value in AI, he believes it should always remain a support tool, never a decision-making entity.

Trusting Judgment Over Algorithms

When asked what he does when an AI recommendation feels wrong, Dr. Yazigi is direct. He explains that AI can be helpful, but it lacks what truly matters in patient care.

For him, being a physician is not just about data. It is about understanding people: “As physicians, we integrate far more than just data. We integrate the patient’s history, comorbidities, social context, preferences, and subtle clinical clues.”

And when something feels wrong, he trusts that instinct: “If something feels off, that instinct usually comes from experience, and I would always prioritize that over an AI suggestion.”

Should AI Ever Act Alone?

Some people lose trust in AI after one clear mistake. Dr. Yazigi sees the issue differently: “I wouldn’t rely on AI to manage a case independently in the first place.”

For him, AI should always remain in a supporting role: “In my view, AI should always be used as a supportive tool, not as a decision-maker.”

He believes every AI output must be questioned and verified: “Any suggestion it provides should be reviewed, verified, and researched by the physician before being applied to a real patient.”

His advice is simple: “Never take an AI recommendation at face value.”

What Must Always Stay Human

When asked which decisions in cancer care should never be handed over to AI, he does not hesitate: “Everything basically.”

He explains: “The overall diagnosis, treatment, management strategy and goals of care must always remain human. These decisions are not just technical. They are deeply personal, ethical, and emotional. They require understanding the patient’s values, fears, family situation, and wishes.”

Because of this, he believes AI can never replace a doctor.

Where AI Truly Helps

Dr. Yazigi does see a real place for AI in medicine: “I do think there is a valid place for AI.”

He uses it to save time and mental energy: “In my daily practice, I use AI to optimize time and workflow. It can help point toward relevant literature or act as a sounding board when a diagnosis is difficult.”

But he draws a clear line: “It should never dictate how a patient is managed. The final responsibility medically, ethically, and morally must always rest with the treating physician.”