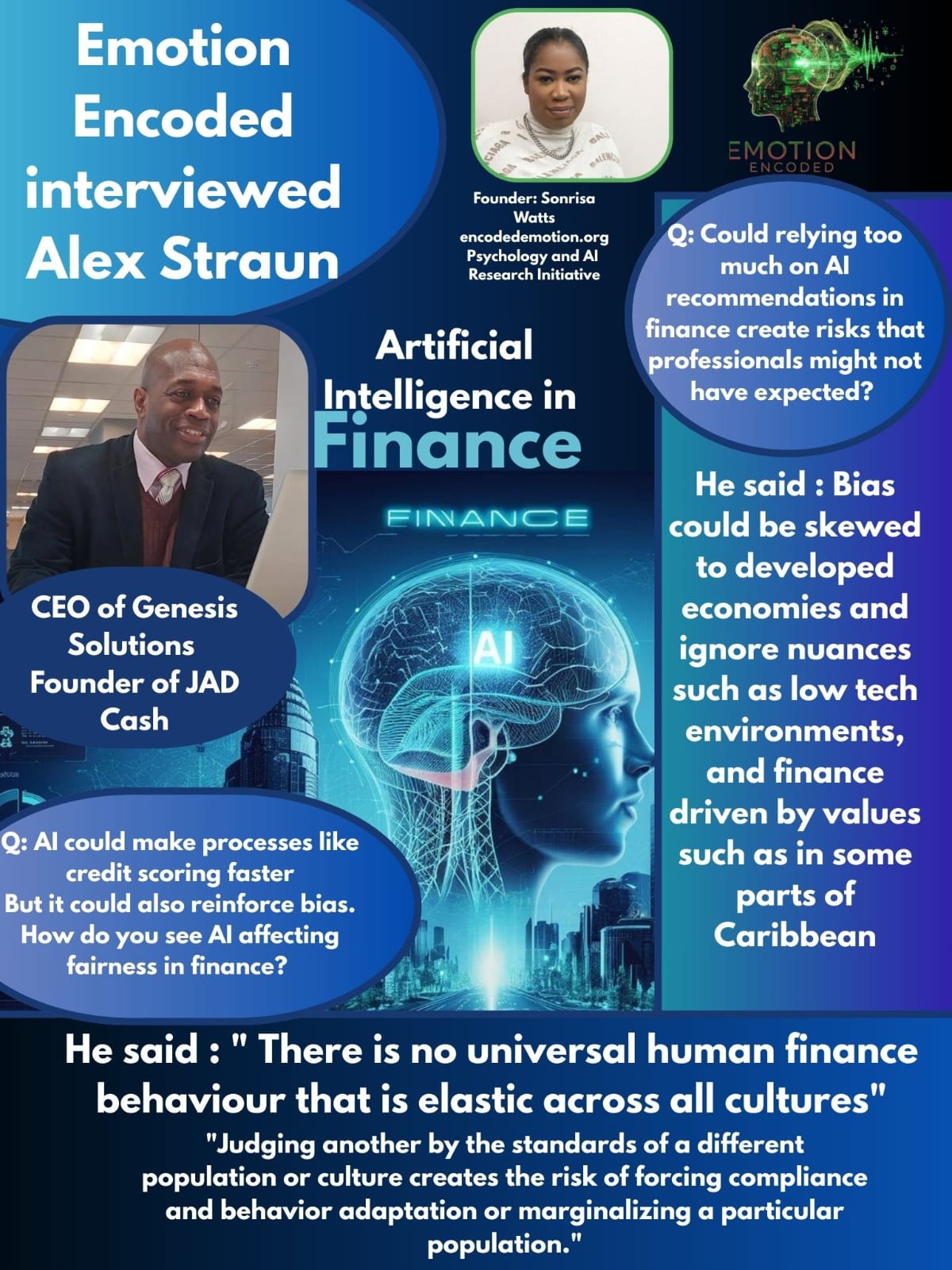

In the fast-paced world of financial technology, a common narrative prevails: AI is the future, a perfect oracle of data-driven truth. Yet, a conversation with financial professional Alex Straun reveals a more complex, and cautionary, perspective. His insights move beyond the hype to expose a fundamental paradox. The very strengths of AI are also its most dangerous blind spots. Straun argues that without a healthy dose of human skepticism and a deep respect for cultural context, AI won't just automate finance. It will inadvertently institutionalize injustice.

The Myth of Blind Trust

The first and most critical point Straun makes is that trust in AI should never be absolute. In his view, a professional should trust an algorithm the same way they trust a human colleague: through a relationship built on healthy skepticism and continuous validation.

"Professionals should always develop a state of healthy skepticism. Any AI recommendation should be viewed and validated against known truths and benchmarks. Just as humans can make mistakes because of limitations of understanding so too can any system trained to automate the process. Trust in any AI tool should never be blind."

This is not a simple warning against a single error. It's a fundamental challenge to the prevailing belief that AI, because it is "data-driven," is immune to human fallibility. Straun suggests that both humans and AI are susceptible to "limitations of understanding." In a system where billions of dollars can be moved in an instant, a catastrophic mistake is less about a single bug and more about the collective failure to question a system's core assumptions.
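For readers who want to see what "validated against known truths and benchmarks" could look like in practice, here is a minimal Python sketch. It is an illustration only, not Straun's method: the `BenchmarkCase` type, the function names, the sample decisions, and the 95% threshold are all hypothetical choices made for this example.

```python
from dataclasses import dataclass


@dataclass
class BenchmarkCase:
    case_id: str
    model_decision: str   # what the AI tool recommended (hypothetical field)
    known_outcome: str    # the verified, human-reviewed answer


def agreement_rate(cases):
    """Fraction of benchmark cases where the model matches the known outcome."""
    if not cases:
        raise ValueError("benchmark set is empty")
    matches = sum(1 for c in cases if c.model_decision == c.known_outcome)
    return matches / len(cases)


def needs_human_review(cases, min_agreement=0.95):
    """Flag the tool when agreement drops below an assumed threshold."""
    return agreement_rate(cases) < min_agreement


# Illustrative, made-up benchmark cases.
cases = [
    BenchmarkCase("a1", "approve", "approve"),
    BenchmarkCase("a2", "decline", "approve"),
    BenchmarkCase("a3", "decline", "decline"),
    BenchmarkCase("a4", "approve", "approve"),
]

rate = agreement_rate(cases)      # 3 of 4 cases agree
flag = needs_human_review(cases)  # below the assumed 0.95 threshold
print(f"agreement={rate:.2f}, needs_review={flag}")
```

The point of the sketch is the habit, not the numbers: the tool's output is checked continuously against answers that humans have already verified, and a drop in agreement triggers a closer look rather than continued trust.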

The Deceptive Power of Patterns

Straun recognizes the undeniable power of AI in fields like fraud detection and risk prediction, acknowledging that AI systems are "perfectly poised to analyze and detect such patterns." This is the widely accepted benefit of the technology: its ability to see connections invisible to the human eye.

However, Straun says that the patterns AI identifies are not universal truths; they are products of their environment. This is where he introduces the critical concept of bias inherited from training data.

"Bias could therefore be skewed to developed economies and ignore nuances such as low tech environments, and developing or finance driven by values such as some parts of Latin America, Africa, the Caribbean and Sharia Compliant Finance."

He highlights that a model's bias isn't necessarily a malicious defect; it's an inherent consequence of the data it's trained on. An algorithm designed for Wall Street, for example, is not equipped to understand the financial behaviors or value systems of a community-based lending circle in Latin America or a Sharia-compliant system.
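One way to make this kind of bias visible is to measure a model's error rate separately for each population it serves, rather than as a single overall figure. The Python sketch below assumes a hypothetical list of `(group, predicted_repaid, actually_repaid)` records; the group names and values are invented purely for illustration and are not drawn from any real dataset.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return the share of wrong predictions for each population group."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Made-up records: (group, model predicted repayment, borrower actually repaid)
records = [
    ("developed_market", True, True),
    ("developed_market", False, False),
    ("developed_market", True, True),
    ("developed_market", True, True),
    ("lending_circle", False, True),
    ("lending_circle", False, True),
    ("lending_circle", True, True),
    ("lending_circle", False, False),
]

rates = error_rate_by_group(records)
for group, rate in rates.items():
    print(f"{group}: error rate {rate:.2f}")
```

In this toy data the model is wrong on 2 of 8 records overall, which hides the fact that every error falls on the lending-circle borrowers. A per-group breakdown is a simple first check, under these assumptions, for the skew Straun describes.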

Codifying a One-Size-Fits-All Injustice

Straun’s final point synthesizes his entire argument into a powerful critique of global financial models. He directly challenges the notion of a "universal" approach to credit scoring, a process that AI is rapidly automating.

"There is no universal human finance behavior that is elastic across all cultures and environments. Therefore, judging another by the standards of a different population or culture creates the risk of forcing compliance and behavior adaptation or marginalizing a particular population."

This statement is a stark warning. By applying a single, globally scaled model, we risk "forcing compliance" to a dominant cultural standard, effectively punishing those whose financial habits don't align. This process doesn't just create bias; it actively marginalizes populations and codifies an inherent injustice into our financial systems. Straun's proposed solution is as simple as it is radical: train models on "large data sets of historical behavior of the population that it is meant to govern."

Ultimately, Straun’s insights serve as a powerful reminder that AI is a tool, not a perfect oracle. His nuanced perspective moves beyond the hype and delves into the ethical and cultural dimensions that are often overlooked. The true challenge for the financial world isn't merely to build faster, more accurate algorithms, but to ensure these systems are designed with a deep respect for human diversity and context. It is a call to action for developers and professionals alike: to prioritize the integrity of the data and the fairness of the outcome, ensuring that the future of finance remains rooted in empathy and sound judgment.