Status Quo Bias: Why Organisations Resist Change

When asked why established organisations cling to their legacy systems rather than adopting modern AI infrastructure, Mr. Inanga identified fear as the primary driving force.

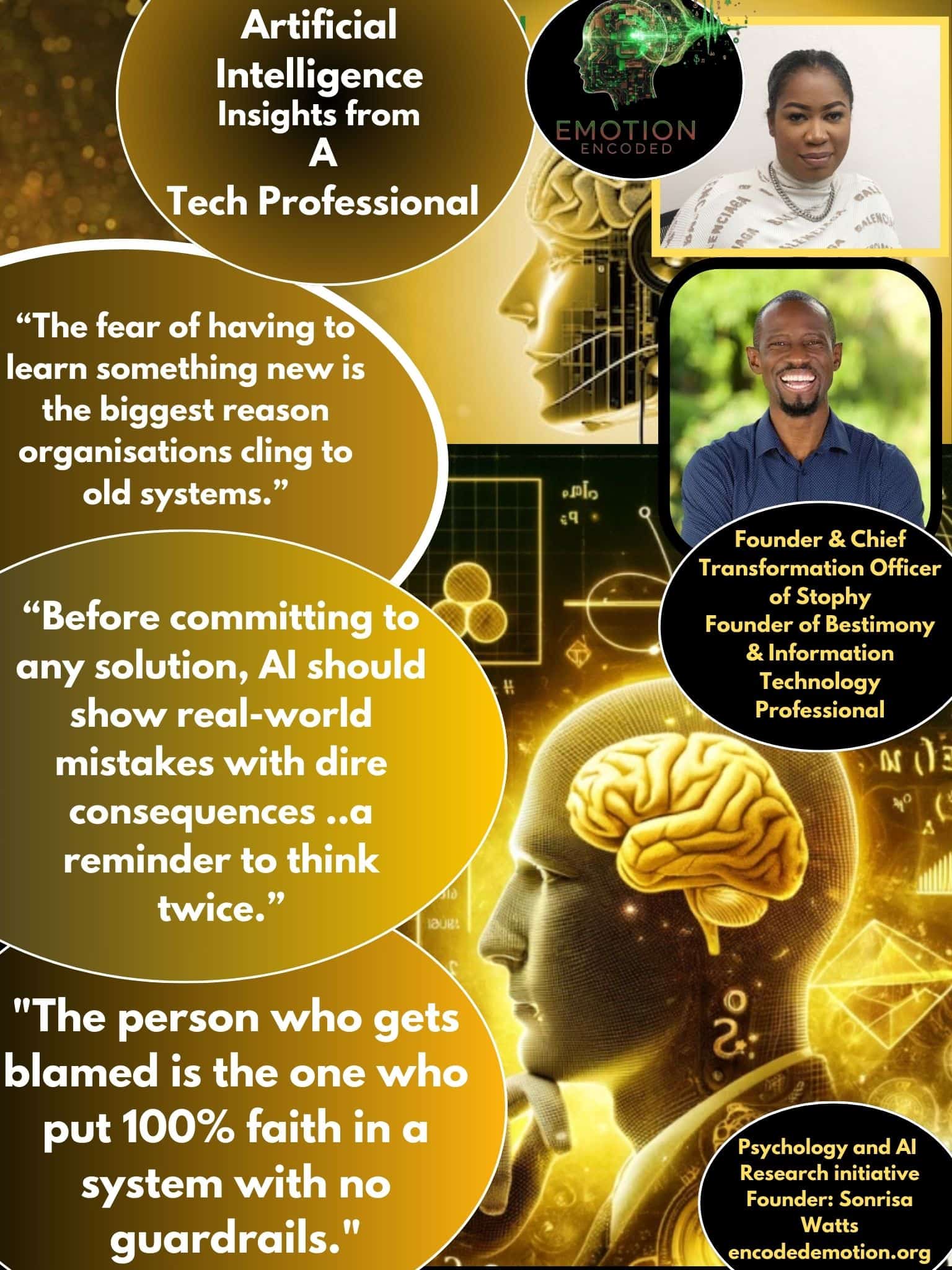

“The fear of having to learn something new. Adopting new tech and methods requires a fundamental shift in company culture, habits, and established workflows. This transition can be really scary for people, even for those who are considered tech-savvy.”

This resistance is often misread as a purely technical problem, such as technical debt or poor data hygiene. As Mr. Inanga explains, the core issue is human: the difficulty of leaving the familiar behind, and the psychological cost of steep learning curves and cultural change.

Algorithm Aversion: Who Gets Blamed?

Liability is a central question: who is responsible when an AI makes a harmful, potentially fatal mistake in a hospital, or produces a biased ruling in a courtroom? According to Mr. Inanga, blame cannot simply be shifted onto an opaque system.

“The person who gets blamed is the person who chose to put 100% faith in a system that had NO guardrails to ensure the validity of the data or the integrity of the decision. The fear of liability does play a very HIGH influence in the rejection of AI and new tech. If AI can mess up with the simplest of situations, there is no telling the catastrophic consequences of messing up when the stakes are higher.”

His argument suggests that the fear of being held legally and professionally accountable is one of the biggest barriers to the wide-scale adoption of unproven AI models in critical infrastructure. Trust, in other words, has to be earned through transparency and effective human governance.

Automation Bias: Preventing Blind Reliance

Automation bias is the cognitive trap in which users over-rely on automated systems. It produces two kinds of error: errors of omission, where users miss a problem because the system failed to flag it, and errors of commission, where users act on output that is incorrect. How can professionals be trained to avoid this dangerous over-reliance on AI tools? Mr. Inanga proposed a provocative solution rooted in preventive education.

“Hmm. I think it’s quite simple. Before committing to any document, solution, or platform, have AI show similar projects, similar finished products that resulted in mistakes made with dire consequences just because the guard rails were not put in place. It’s almost the same as scaring teens into abstinence by showing them pictures of body parts infected with sexually transmitted infections.”

In essence, he advocates a form of “failure-based training”, in which risks are presented vividly and emotionally so that professionals never forget the real cost of blind trust and complacency. The approach replaces abstract warnings with concrete, memorable case studies.

Explainability: Simpler Transparency or Complex Accuracy?

The field of Explainable AI (XAI) deals with making AI decisions understandable to humans. Presented with the classic trade-off between a highly accurate but complex ‘black-box’ model and a slightly less accurate but simpler, transparent one, Mr. Inanga answered clearly and immediately.

“Simpler is better. Once you know for sure how the food is made, what ingredients went into it, then troubleshooting becomes a lot easier. Being able to tinker and tamper becomes easier too.”

Mr. Inanga suggests that in high-stakes applications affecting people, debuggability and transparency outweigh marginal gains in predictive accuracy. The ability of a human operator to audit, understand, and intervene in the decision-making process is critical to maintaining ethical standards and operational safety.

Algorithms Codifying Injustice: Fix or Govern?

Bias embedded in training data is one of the most pressing threats to fairness and equality in AI applications: models that learn from historical records can codify historical injustice. Mr. Inanga was firm that purely technical fixes cannot solve what is fundamentally a social problem.

“For sure, governance and community review. Us humans are supposed to be the guardians of the last frontier. Overreliance on technical fixes again places the control and trust in the machines, which makes NO sense, seeing that’s why we ended up ‘here’ in the first place!!”

For him, the solution lies in human responsibility, collaborative governance frameworks, and continuous community oversight, rather than algorithmic quick fixes that can mask deeper systemic issues.

Personal Verdict: Where Would You Trust AI First?

To conclude, we asked Mr. Inanga where he believes AI currently belongs, and which high-stakes field is most ready for trusted integration.

“I’ll trust AI first in Finance mainly because this is a numbers game and data is hardly ever subjective when it comes to finance. At the end of the day, it’s either you have the money or you don’t!! 😃”

His perspective highlights a broader reality: fields built on highly quantifiable, less subjective data, such as finance, are arguably better suited to early AI integration, while domains like law and medicine, which depend more heavily on subjective human judgement, demand far greater caution and human supervision.

Conclusion: The Human Frontier

Mr. Inanga’s insights cut through the technical jargon and the excitement over model size to expose the often uncomfortable human challenges of AI adoption. His answers reinforce a central theme of Emotion Encoded’s research: the long-term future of AI will not be shaped by algorithms alone.

Instead, the future will be defined by the people who must decide when to trust these systems, when to question their output and, most importantly, when to push back and exercise human oversight. Responsible integration demands vigilance, transparency, and a commitment to human-centric ethical guardrails.