Emotion Encoded Research Initiative

❕Research Results in St. Kitts and Nevis ❕

Parents, Teachers, and Artificial Intelligence in the Classroom: Trust, but With Conditions

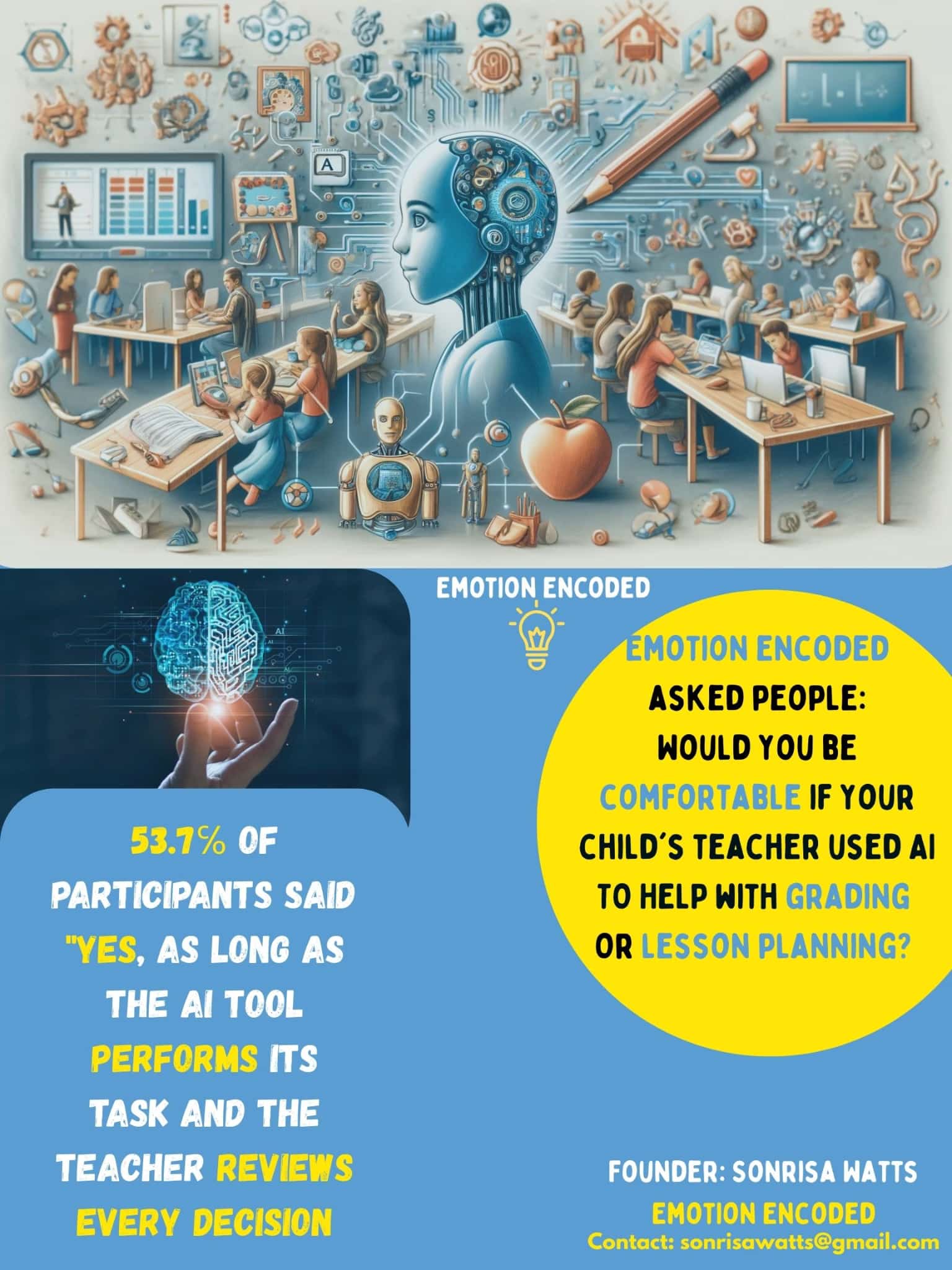

As part of my research initiative Emotion Encoded, I asked participants a simple but important question: *“Would you be comfortable if your child’s teacher used AI to help with grading or lesson planning?”*

The responses revealed a clear pattern:

53.7% of parents said “yes,” but only under certain conditions. They were open to AI as long as it performed its tasks and the teacher reviewed every assignment and decision. This suggests that parents prefer AI as a support tool rather than a replacement. Their insistence on teacher review can be read as a safeguard against automation bias, the tendency to over-rely on the output of automated systems. It also highlights the value parents place on human reasoning, judgment, and rationale in education.

26% of parents said “no.” This group showed resistance, signaling a lack of trust in AI for something as critical as a child’s education. Their responses align with algorithm aversion, a tendency to distrust automated systems, and may also involve status quo bias, as many parents prefer the familiar approach of teacher-led grading and lesson planning.

11% of parents said “yes” without conditions. For these participants, AI was seen simply as another tool in the classroom rather than a potential threat. Their acceptance may have been influenced by the framing effect, where presenting AI as an assistant rather than a replacement helped build trust.

The conditional trust that parents place in AI in education points to a larger message: the successful integration of AI requires attention to human psychology, not just system performance. These findings emphasize a key theme in my research initiative: AI adoption is deeply tied to how people emotionally and cognitively negotiate trust with these systems.


*Status Quo Bias? Automation Bias vs. Algorithm Aversion? Or Framing Effect?*
