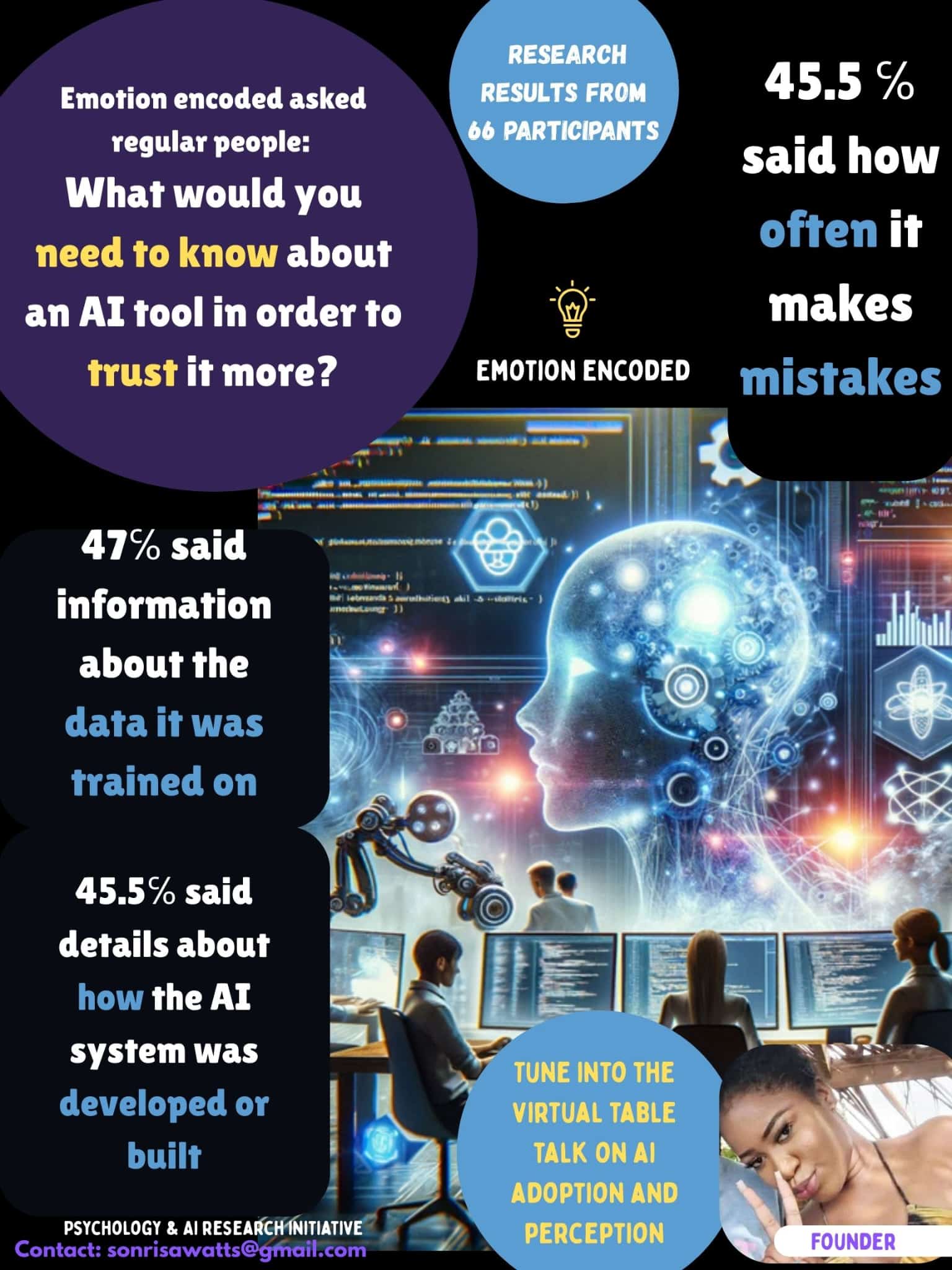

In an Emotion Encoded survey, I asked 66 people:

“What would you need to know about an AI tool in order to trust it more?”

The responses show that trust in AI is not automatic; it must be earned. People do not accept technology simply because it works. They look for conditions that make them feel secure, informed, and respected. (Respondents could choose more than one answer, so the percentages below add up to more than 100%.)

- 47.0% want clear information about the data the AI was trained on. People care about the foundation of the system, meaning what it has learned from, because that foundation shapes whether its outputs are biased or fair and whether they reflect reality.

- 45.5% want details about how the AI works. They do not necessarily need to read code, but they want a digestible explanation of the logic and mechanics behind its decisions.

- 45.5% also want to know how often the AI makes mistakes. A headline accuracy figure is not enough. Users want transparency about failure rates and limitations, not just success stories.

- 43.9% said they would trust AI more if a human were still involved in the process. This suggests that many people need the reassurance of human judgment, especially when outcomes directly affect lives.

- 21.2% wanted to know who created the AI. Because far fewer respondents chose this than chose training data, mechanics, or error rates, the brand behind a tool appears to matter less than how the tool performs and explains itself.

- 22.7% admitted they could "never fully trust AI," revealing a baseline skepticism that may never be overcome, regardless of design.

Tech companies have tried to address these fears by promoting Explainable AI (XAI), a set of tools and frameworks designed to clarify how AI systems reach decisions and to ease fears about the "black box." Influential tech companies and policymakers have treated XAI as the cure for public distrust. The reality, however, is more complex. Offering algorithmic explanations does not, on its own, create trust.

From a psychological perspective, trust in AI is less about liking machines and more about reducing uncertainty. Humans are naturally risk-averse. We build trust in one another by showing consistency, reliability, and accountability over time. The same principle applies to AI. People feel safer when they know how systems make decisions, when they have evidence of accuracy and error rates, and when they see that a human can intervene if necessary.

These findings point to a broader principle: trust is built through transparency, accountability, and reliability, not through marketing or promises of perfection. Without these qualities, even the most statistically accurate AI systems risk rejection. Once a system is rejected, its potential benefits in healthcare, law, or education may be lost entirely.

Ultimately, building trust in AI is not just a technical challenge but also a psychological one. Trust must be earned through openness, through admitting limitations, and through respecting the human need for clarity and control. AI that fails to meet these conditions will continue to face resistance, no matter how advanced it becomes.