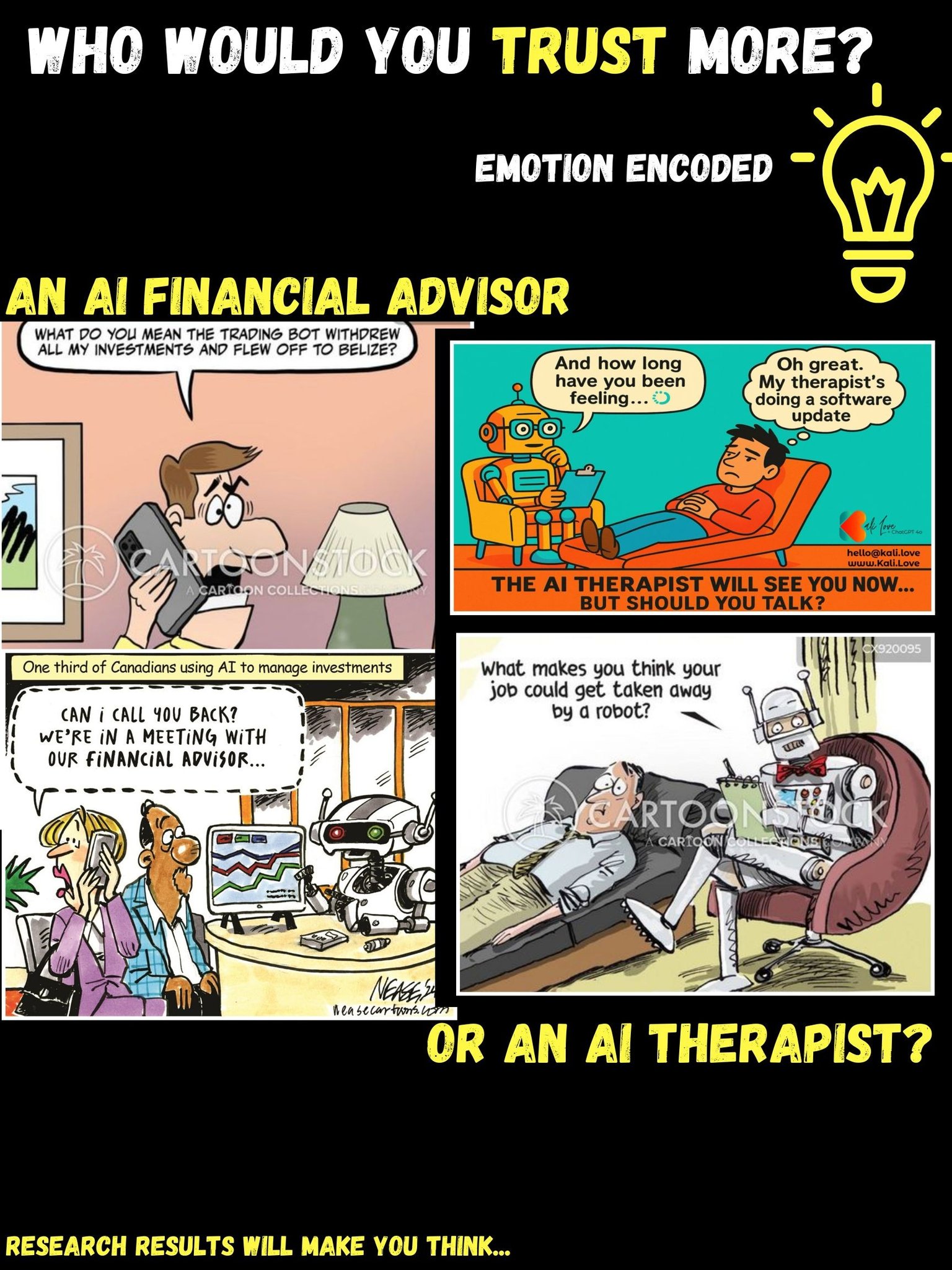

“Who would you trust more: an AI advisor or an AI therapist?”

The answers were telling.

“Financial advisor is a little too private for me.”

“I prefer a human to deal with my money.”

“Therapist errors are less likely to be lethal.”

“Although both should be private, an AI therapist can draw on a large resource and apply it to challenges I may be experiencing, whereas finances are more personal.”

“Therapist work may involve actual human feelings and rational thinking, while financial advice is based on numbers and their interpretation.”

“A therapist needs a certain level of emotional intelligence that AI has not developed.”

“Finance is numbers. I can sort out my own feelings.”

“Therapist has a lot more nuances and human components that robots can’t completely understand.”

What This Reveals

These responses highlight something bigger than preferences: they expose how people weigh risk and trust differently across domains.

For some, money feels too intimate to hand over to a machine. To them, a wrong move from an AI advisor could mean real, irreversible losses. Therapy, by contrast, sometimes feels safer for experimentation: an AI can pull from vast psychological resources, and if it’s ‘wrong’, the consequences may not feel as severe as a financial error.

Others saw the opposite. To them, therapy requires empathy, nuance, and human warmth, qualities AI cannot truly replicate. Financial advice, being grounded in data and numbers, was perceived as something AI should be better at.

This tension shows that trust isn’t automatic; it’s situational. People ask: *What’s at stake? What can go wrong? What do I lose if the AI makes a mistake?*