🧠 Why Wouldn't People Blame AI Developers? Insights from Our Research 🧠

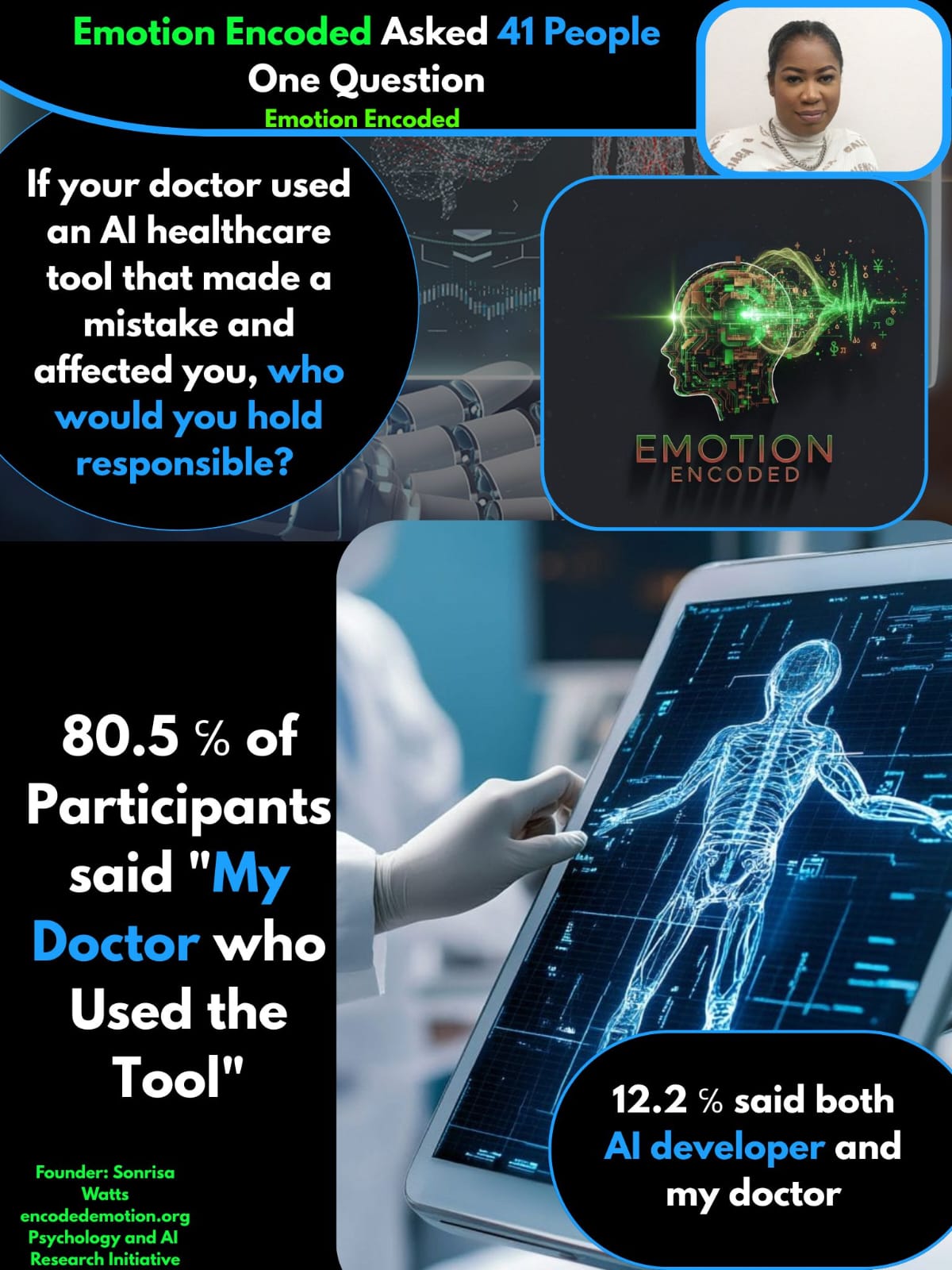

At Emotion Encoded, we recently asked participants a seemingly simple question: If your doctor used an AI healthcare tool that made a mistake that affected you, who would you hold responsible? Of 41 respondents, 80.5% blamed their doctor and 12.2% held both the doctor and the AI developer responsible. None blamed the AI developer alone.
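For readers who want to see the headcounts behind these percentages, here is a minimal sketch that converts the reported shares back into whole respondents. The variable and function names are hypothetical and the counts are inferred by rounding, not taken from the raw survey data. The post does not say how the remaining respondents answered, so the sketch only reports how many are unaccounted for.

```python
# Figures reported in the post; everything derived below is an inference.
TOTAL_RESPONDENTS = 41
reported_percent = {
    "doctor": 80.5,
    "ai_developer": 0.0,
    "both": 12.2,
}


def implied_count(percent, total):
    """Return the whole-number count closest to the given share of total."""
    return round(percent / 100 * total)


# Approximate number of respondents behind each reported percentage.
implied_counts = {
    answer: implied_count(percent, TOTAL_RESPONDENTS)
    for answer, percent in reported_percent.items()
}

# Respondents not covered by the three reported categories.
unaccounted = TOTAL_RESPONDENTS - sum(implied_counts.values())
unaccounted_percent = round(100 * unaccounted / TOTAL_RESPONDENTS, 1)

print(implied_counts)       # {'doctor': 33, 'ai_developer': 0, 'both': 5}
print(unaccounted)          # 3
print(unaccounted_percent)  # 7.3
```

Under these assumptions, roughly 33 people blamed the doctor and 5 blamed both, leaving about 3 respondents (7.3%) whose answers are not described here.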

At first glance, this result may seem surprising. Why wouldn’t people hold the creators of AI accountable, especially in high-stakes contexts like healthcare? Psychological research offers some explanations.

The Proximity Bias

Humans tend to hold those closest to the event more accountable. In this scenario, the doctor is the direct agent, the one who made the final decision, while the AI developer is distant and abstract. Even if the tool caused the error, people instinctively focus blame on the human face of the interaction.

Trust in Expertise

There is also a dimension of delegated responsibility. Participants may perceive doctors as the ultimate gatekeepers, whose expertise should override or catch AI mistakes. The AI tool is seen as an instrument, not an independent actor, which reduces perceived developer liability.

Why AI Developers Should Also Be Held Responsible

AI developers design the algorithms and select the training data that guide these tools. If errors arise from flawed programming, biased datasets, or poor validation, developers bear responsibility for the foreseeable consequences. They also have a duty to create tools that are safe, transparent, and understandable to the professionals who use them. Failing to meet these standards can directly contribute to mistakes in real-world use.

At Emotion Encoded, we continue to explore these emotional and cognitive dynamics, seeking insights that can bridge the gap between technological innovation and human expectations.

#ArtificialIntelligence #AIinHealthcare #ResponsibleAI #AIAccountability #CognitiveBias #HumanDecisionMaking #TechResponsibility #AIResearch #TrustInAI

Picture References

- AIU Article: Atlantic International University. (n.d.). Artificial intelligence in healthcare [Image]. AIU. Retrieved from https://www.aiu.edu/innovative/artificial-intelligence-in-healthcare/

- SDBJ Article: San Diego Business Journal. (n.d.). The AI doctor-patient experience [Image]. San Diego Business Journal. Retrieved from https://www.sdbj.com/healthcare-2/the-ai-doctor-patient-experience/