What Happens to Trust When Lawyers Use AI in Secret?

By Emotion Encoded

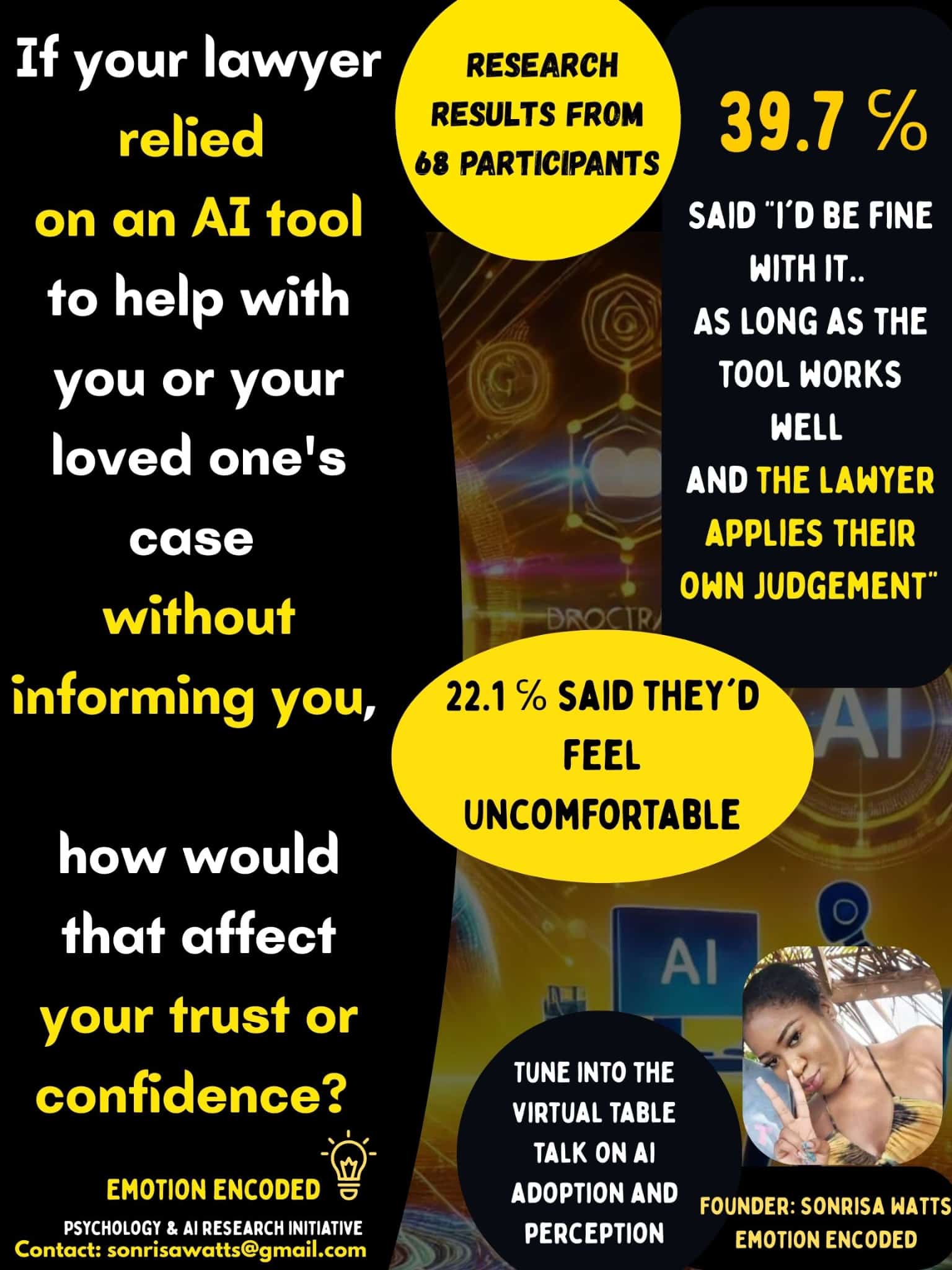

In a survey of 68 people, Emotion Encoded asked:

“If your lawyer relied on an AI tool in your case without informing you, how would that affect your trust?”

The answers were revealing. Nearly 40 percent said they would be comfortable only if the lawyer still applied their own judgment. About a fifth said the lack of transparency would make them uneasy. A smaller group said they would feel angry or betrayed, while others weren’t surprised at all, assuming AI was already quietly at work behind the scenes.

The message is clear: accuracy alone does not create trust. A legal AI can be nearly flawless in its analysis, but people are not reassured by perfection. They are reassured by openness, honesty, and the sense that a human being still carries the final responsibility.

The Psychology Behind the Reactions

When people learn that AI was involved without their knowledge, the discomfort may stem from disappointment. Clients may have assumed their lawyer’s own expertise was enough, so that relying on AI would not be necessary. Trust depends on believing that the person you’ve hired (your lawyer, your doctor, your advisor) is fully accountable to you. If something else is shaping their decisions in secret, that expectation cracks.

Psychologists call one side of this problem automation bias: the tendency to accept a machine’s suggestions without much scrutiny simply because a machine produced them. But there’s an opposite pull at work as well: a natural resistance to being left in the dark. Even if the AI is correct, people want to know it was used so they can weigh its role in the outcome.

There’s also the framing effect: the way we describe AI’s role changes how it feels. Saying a lawyer is “supported by AI research tools” conveys partnership. Saying a lawyer “let AI make decisions” signals a loss of control. Subtle shifts in language shape whether people feel reassured or betrayed.

Is Human Judgment Becoming More Valuable?

In high-stakes fields like law, people don’t just want efficiency or speed. They want accountability and the reassurance that a human mind is guiding the process. Ironically, the rise of AI is not reducing the value of human judgment; it is making it more precious. Clients want to know that when technology is used, its output is filtered through human reasoning and care.

For clients, the use of advanced tools isn’t just about efficiency; it’s about trust. It’s about whether professionals stay honest, transparent, and ultimately responsible for the decisions that affect people’s lives.