When AI Disagrees with Your Doctor: Who Do You Trust?

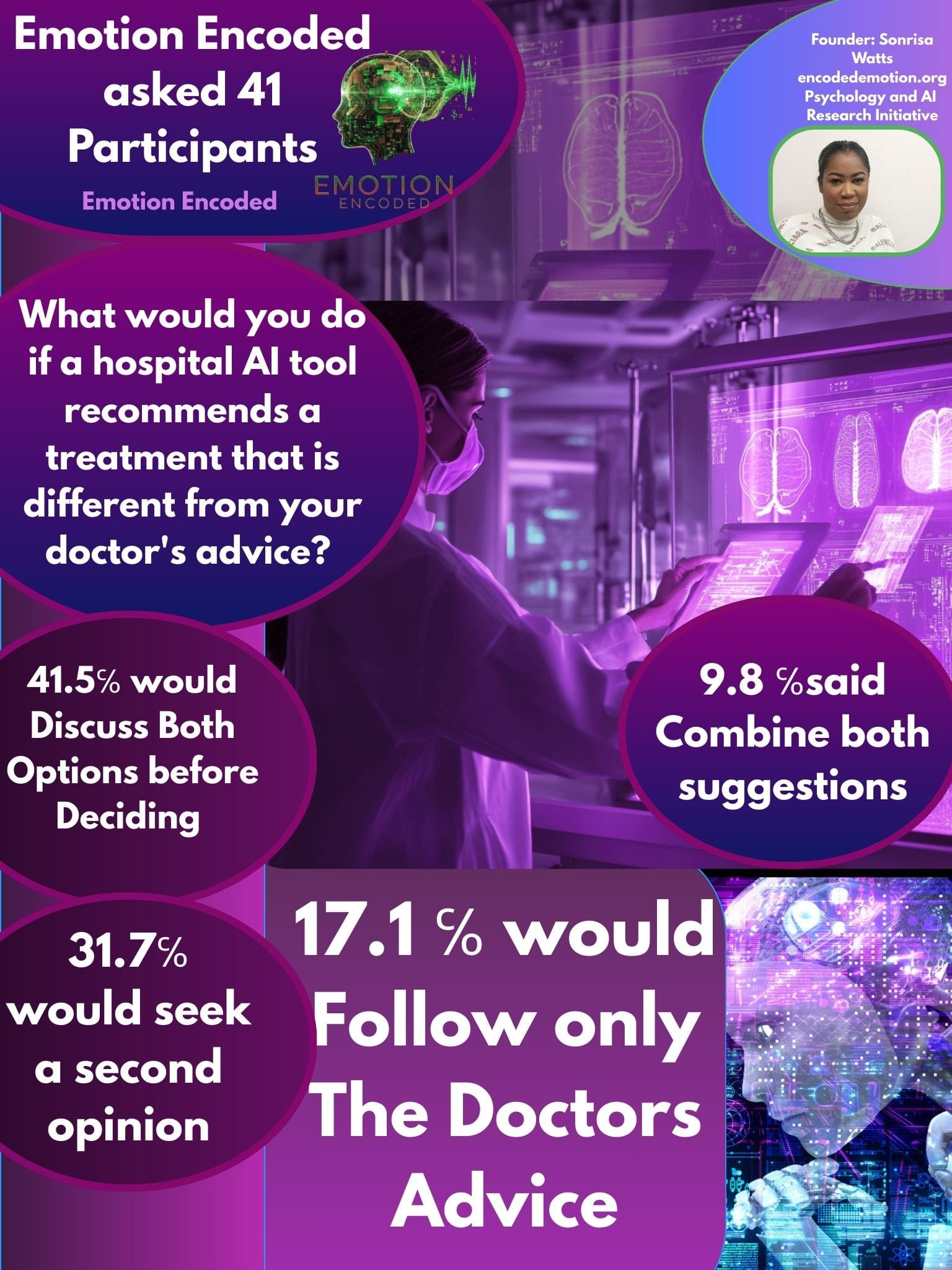

Emotion Encoded asked 41 participants a simple but unsettling question: “What would you do if a hospital AI tool recommended a treatment that was different from your doctor’s advice?” The answers revealed something deeper than statistics. They revealed a struggle with trust.

- 41.5% said they would discuss both options before deciding.

- 31.7% said they would seek a second opinion.

- 17.1% said they would follow only the doctor’s advice.

- 9.8% said they would combine both suggestions.

At first glance, these numbers look like preferences. But beneath them is a shift in how people think about expertise, risk, and authority. For centuries, the doctor’s word was final. Yet here, fewer than 1 in 5 participants would accept their doctor’s advice without hesitation when AI presents a different path. Instead, most people wanted conversation, validation, or further proof. Some even considered blending human and machine recommendations into a single approach. This suggests that AI is not simply a tool in healthcare. It is a new actor in the decision-making process, one that patients cannot ignore.

What does this mean for the future?

It means trust is no longer binary. It is not about either trusting your doctor or the AI. Instead, trust becomes a negotiation between human expertise, machine precision, and the patient’s own intuition.

In high-stakes fields like medicine, this negotiation could reshape everything. Patients may demand more transparency. Doctors may need to defend their reasoning against algorithms. AI developers may be forced to design systems that explain their choices, not just output them.

At Emotion Encoded, we see this as a valuable insight. People are telling us that they no longer trust blindly, and that includes relying solely on a clinician's intuition. That message sets the blueprint for how AI must evolve if it is to coexist with human judgment.

Because the real question is not just “Doctor or AI?” The real question is: how do we create a world where patients feel secure in choosing either, or both?