AI and Mental Health: Insights from a Pastoral Counsellor

St. Kitts 🇰🇳

To encourage candour from our interviewees and to protect their professional privacy on this sensitive topic, all participants were granted anonymity.

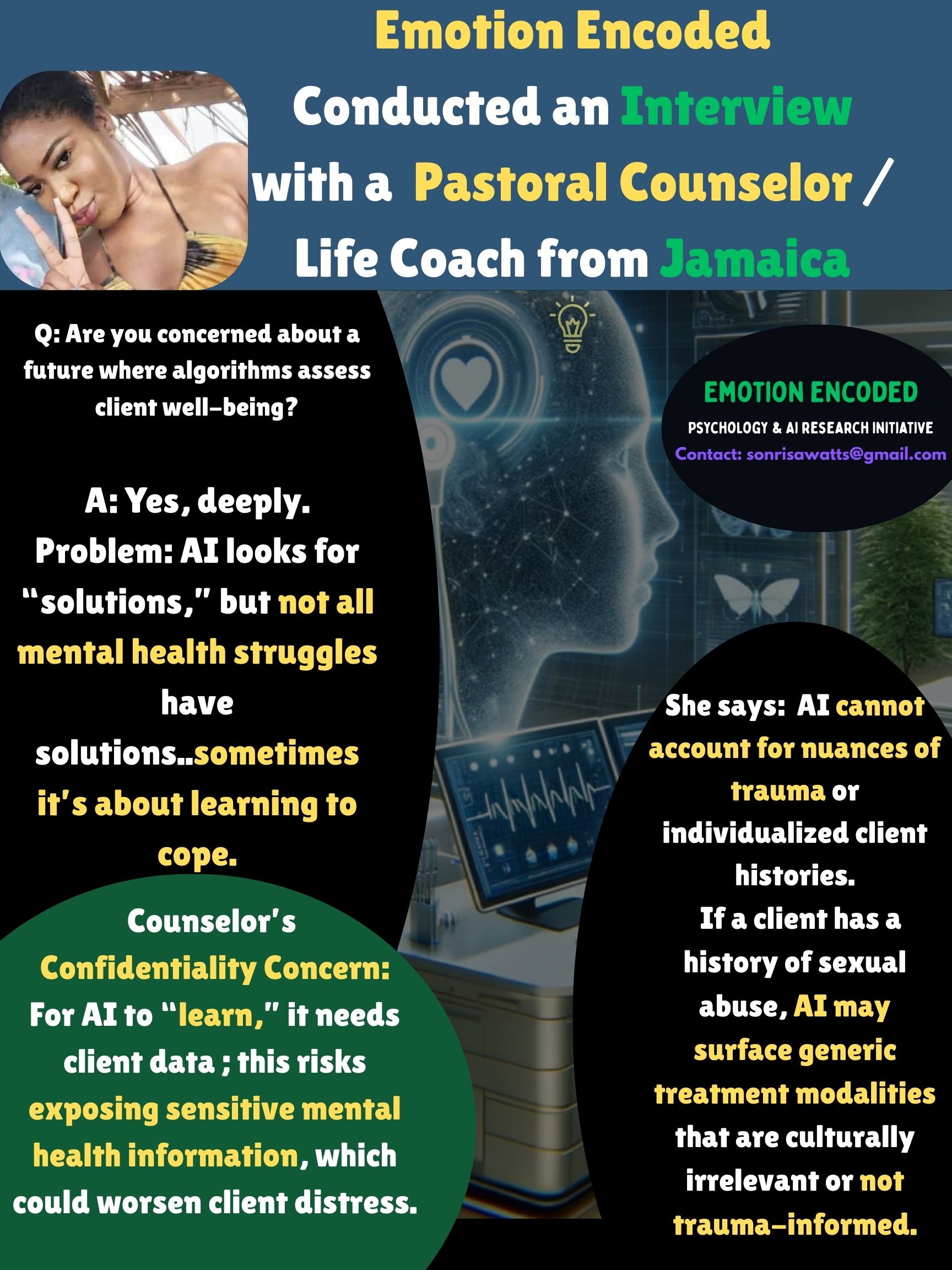

As generative AI grows more popular, Emotion Encoded set out to gather perspectives from mental health professionals... In pastoral and therapeutic settings, the technology raises profound questions about trust, empathy, and human connection. This counsellor's reflections reveal how deeply psychological biases shape professional attitudes toward AI.

Everyday Use vs. Professional Boundaries

The counsellor uses AI personally for tasks like booking travel, customer service, and scheduling, areas where efficiency outweighs risk. In her practice, however, she draws the line at AI interpretation or guidance.

Trust and Transparency

When asked what makes technology trustworthy, she emphasized context and cultural relevance. AI lacks the ability to read the nervous system, body language, or spiritual cues that counsellors rely on. This arguably reflects algorithm aversion: even if an AI produces accurate outputs, professionals hesitate to trust it because it cannot explain itself in human terms. But is accuracy even a valid measure here? Has artificial intelligence reached the level where it can connect emotional responses with body cues? Could a generative AI ever achieve emotional intelligence?

The Illusion of Control

For the counsellor, the danger lies in AI’s tendency to push for solutions. But in mental health and pastoral care, not all struggles have solutions. Many require learning to live with suffering, finding resilience, or seeking spiritual grounding. Expecting AI to “fix” complex human realities illustrates the illusion of control, where algorithms create a false sense of mastery over human complexity.

Fear of Codifying Injustice

She raised deep concerns about trauma and cultural sensitivity. If a client has a history of sexual abuse, AI could draw on generalized internet sources, erasing the client's individuality and choosing the wrong treatment modalities. That’s the distinction between trained mental health experts and... robots. This highlights the fear of codifying injustice: algorithms risk reinforcing biases and offering one-size-fits-all answers to unique, vulnerable situations.

Confidentiality, empathy, and discernment are inherently human and cannot be delegated to machines. While AI may serve as a tool for administration, it cannot replace the sacred trust of human care.