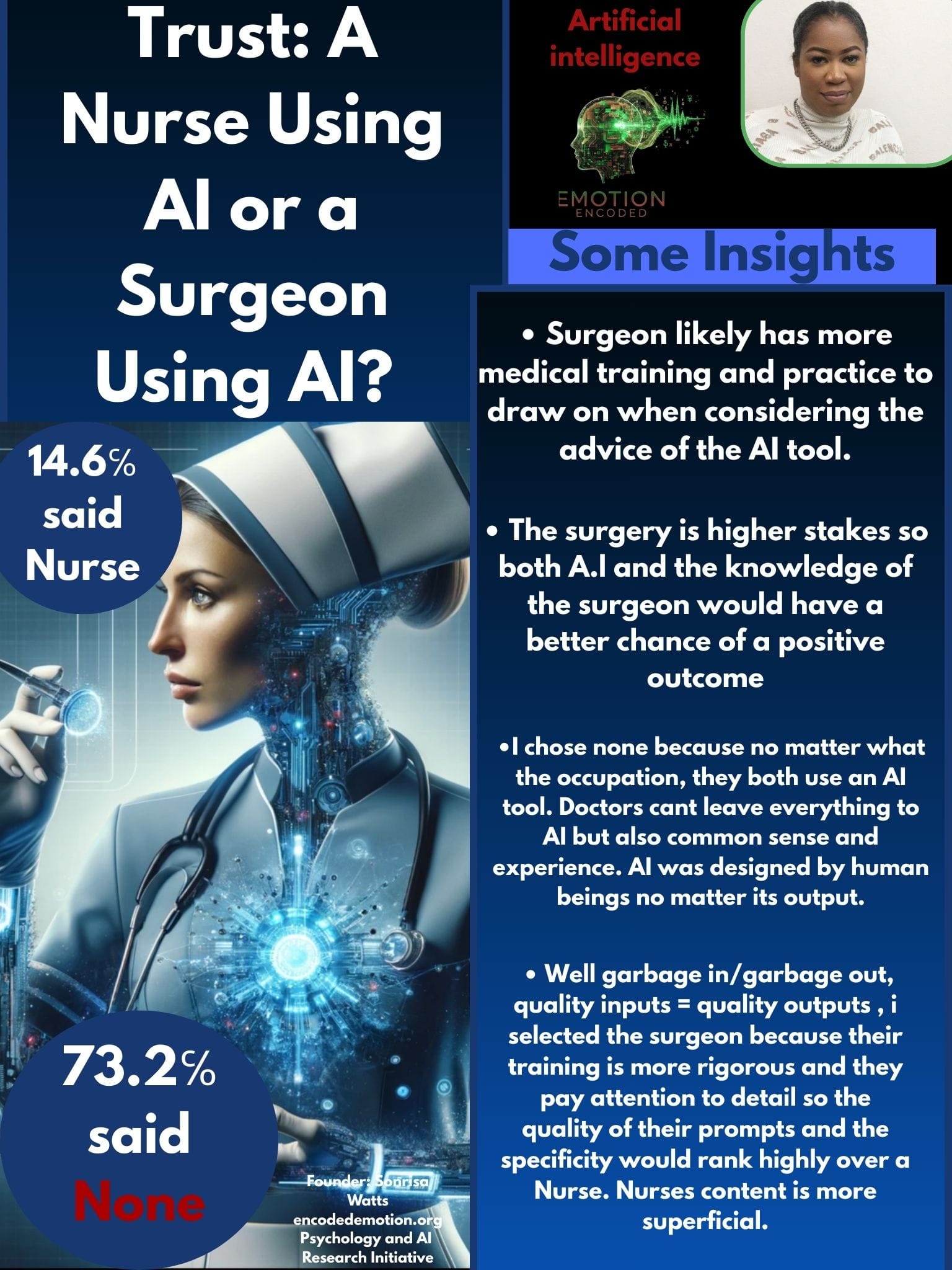

We asked a simple question: if an AI tool made a clinical suggestion, would you trust a surgeon or a nurse more when they used that tool? Thirty percent of respondents answered that neither professional should be trusted if they relied solely on AI. The pattern points to a core tension in public perception: people value professional judgement first and see AI as, at most, a supporting aid.

"None if I wanted an AI opinion I would go to the AI. When I need a doctor or nurse help I am there for them. If they use the tool without my knowing then that is a different story. The doctor should already have the knowledge to assist." — survey respondent

This result has two immediate implications. First, trust in health professionals does not automatically transfer to trust in the tools they use. Second, transparency matters. Patients want to know when AI is part of the decision process.

We contrasted clinical trust with opinions about AI in financial roles. When we asked respondents whether they would trust an AI fraud detector or an AI loan officer more, their answers showed similar caution. People are sceptical about automated decisions that affect their rights and resources, and they expect human oversight.

- A majority of respondents selected "none" when asked whether they trusted a clinician using AI more than the clinician alone.

- When AI outputs are accompanied by numbers or confident-sounding explanations, respondents often express greater confidence, even when those numbers may hide gaps in the underlying data.

- Trust splits by perceived stakes. People tolerate AI in monitoring or administrative roles more than in diagnosis or life-critical decisions.

Why does this matter? Researchers in psychology point to cognitive biases that explain why people either distrust AI or over-trust it. Algorithm aversion makes people wary of an automated tool after seeing it make a single high-profile mistake, even if it usually performs well. Automation bias leads people to over-rely on outputs that appear precise. The illusion of explainability occurs when simple explanations give a false sense of understanding. These biases combine to shape public perception.

From a design perspective, one practical takeaway is simple: show the limits, not only the strengths. Explainable interfaces should include provenance, confidence intervals, and clear caveats about dataset coverage. A tool that displays what it does not know is more likely to be used appropriately by clinicians.

Our survey also suggests a policy implication. Institutions that plan to deploy AI at scale must invest in training, transparency, and liability frameworks. People want both expert judgement and accountable systems. That means clear rules for disclosure, audit trails, and a governance structure that assigns responsibility when errors occur.

The image above accompanies our citation of Nathan Blake's piece on the computational nurse and how new tools are reshaping clinical workflows. See the credit below for the original article.

Emotion Encoded will continue to study how confidence in AI tools interacts with professional roles. If you are a clinician, legal practitioner or financial manager and would like to share your perspective, please reach out via our website.