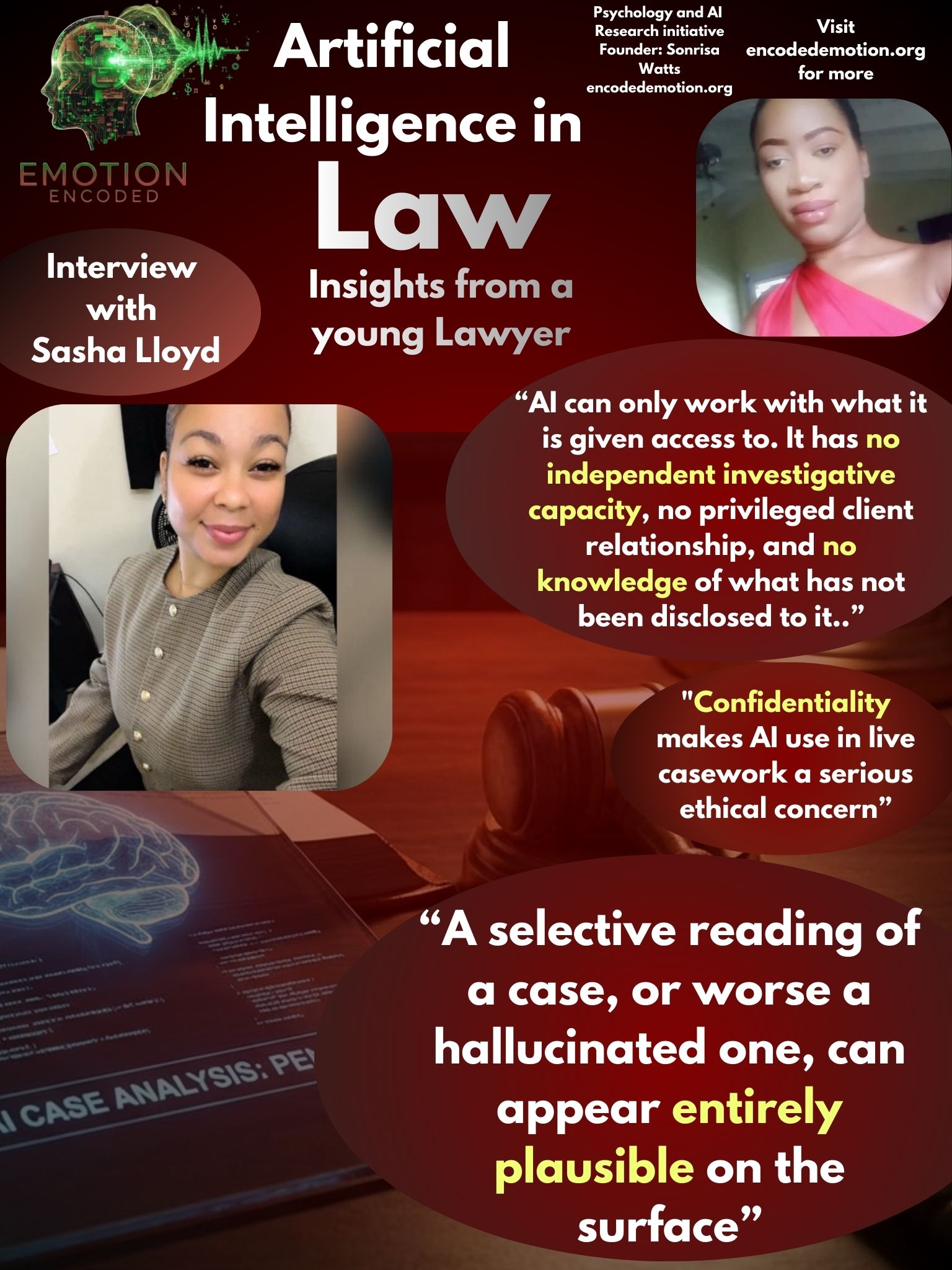

Artificial Intelligence in Law: Insights from Sasha Lloyd

I asked a young lawyer, Sasha Lloyd, some questions for my Emotion Encoded research initiative, and her insights really challenge how we think about the "black box" of AI in professional fields.

The Path vs. the Destination

I started by asking whether she'd rather have a fast answer or one that actually shows its work. For Sasha, there isn't even a debate. She said:

"The latter, without question. Transparency in legal reasoning is non-negotiable; you need to see the path, not just the destination. The practical concern, however, is that most authoritative legal resources are not freely accessible. Platforms like Westlaw, LexisNexis, and even regional databases like the Eastern Caribbean Supreme Court portal operate on paid subscriptions. So an AI confidently citing a case is one thing, but whether it can actually link you to a verified, full text source you can open and read is a very different matter. Even where a citation is real, it may refer to an entirely different case or legal context than the one being discussed, which introduces its own significant risk."

What Should AI Never Touch?

When we talked about what AI should never touch, Sasha pointed out that the tech is fundamentally limited by what it can't see or "feel out" in a client relationship. Her take was:

"When examined critically, the question largely answers itself. AI can only work with what it is given access to. It has no independent investigative capacity, no privileged client relationship, and no knowledge of what has not been disclosed to it. On the question of precedent and moral reasoning, courts follow established legal principles and AI can certainly identify and map those patterns. As for emotional connection to a client, that is not strictly a professional legal function in the formal sense. The more precise concern is that AI's limitations are fundamentally rooted in issues of access and context, and any meaningful application of it within legal practice must be approached with a thorough and clear-eyed understanding of those constraints."

The Reality of Verification

I also wanted to know how she actually verifies AI outputs, and she raised a major point about professional ethics and why relying on summaries is so dangerous. She explained:

"The starting point is that confidentiality makes AI use in live casework a serious ethical concern. Feeding privileged client information into a third party system risks breaching professional obligations, and so active casework remains off the table entirely. Where AI does surface a case citation in another context, the verification process is straightforward: locate the actual judgment and read it in full. A selective reading of a case, or worse a hallucinated one, can appear entirely plausible on the surface. The ratio, the full procedural history, and whether the matter was subsequently appealed or overturned are details that do not survive a summary. The source must always be consulted directly."